- Matridyne Marketing


Transforming Healthcare Payers with LLMs: A Q1 2025 Roadmap to AI-Driven Efficiency

Introduction (Q1 2025 Perspective)

In a rapidly evolving landscape where healthcare payer organizations face both mounting pressures and fresh opportunities, we at Matridyne want to share our current viewpoint on how Large Language Models (LLMs) are poised to transform daily operations and strategic initiatives. This article is written for anyone working within or alongside healthcare payers—whether you’re on the ground floor, in mid-level leadership, or shaping high-level policy.

We recognize that the industry changes at an incredible pace and that each organization’s path will differ based on unique challenges and capabilities. The insights here reflect what we’ve learned through hands-on payer experience and ongoing analysis, but we welcome feedback and further discussion. Above all, we aim to spark pragmatic, step-by-step thinking about LLM adoption that can adapt as new capabilities and best practices continue to emerge.

Executive Summary

- AI Potential Confirmed

- Recent industry research (e.g., McKinsey) suggests that healthcare payers could realize 13–25% reductions in administrative costs, 5–11% reductions in medical costs, and 3–12% in additional revenue by adopting advanced AI technologies. (https://www.mckinsey.com/industries/healthcare/our-insights/the-ai-opportunity-how-payers-can-capture-it-now#/)

- Matridyne’s on-the-ground engagements affirm this value, but we also see foundational readiness—data governance, IT architecture, organizational structure—as the make-or-break factor.

- Crawl–Walk–Run Strategy

We recommend a phased approach: first introduce a secure, general-purpose LLM tool for day-to-day tasks, then incrementally integrate key internal datasets, and finally tackle specialized payer workflows (claims, FWA, UM, etc.).

- Consider Existing Vendor Platforms

For many payers, it may be more efficient to wait for core system vendors (claims, care management, CRM) to embed AI directly rather than build custom solutions. Those aiming to be early movers should consider co-development agreements with vendors or providers to mitigate risk and cost.

- Broad Range of Use Cases

From improving member and provider customer service to automating repetitive project-management tasks, LLMs offer both quick wins and deeper, high-ROI transformations. We also see often-overlooked yet powerful opportunities, such as OCR and document management or code summarization in software development.

- Looking Ahead

As more advanced “thinking” LLMs and autonomous AI agents mature, payers will face new possibilities—from deeper research capabilities to self-directed claim auto-adjudication. For now, a measured approach ensures steady progress without overwhelming existing teams and infrastructures.

1. Where We Align with McKinsey and What We've Observed

1.1 The Upside Potential Is Real

McKinsey’s estimations of 13–25% administrative cost savings, 5–11% medical cost reduction, and 3–12% revenue uplift indicate that end-to-end AI integrations can deliver remarkable benefits. Matridyne’s own work aligns with this finding, especially in high-impact domains like:

- Claims: Real-time claim triage, advanced editing, and anomaly detection.

- Utilization & Care Management: Faster prior authorizations, better patient segmentation, and more accurate policy application.

- Network & Contracting: Optimized rates, proactive contract reviews, and improved payer–provider relationships.

1.2 Readiness Deficit: The Payers’ Common Challenge

Despite the potential, many payers lack modernized data systems, robust AI governance, or the cultural readiness to adopt new technologies. Matridyne’s field experience repeatedly reveals:

- Disparate Data: Fragmented or siloed data that hamper advanced analytics.

- Underdeveloped Operating Models: High turnover, limited process documentation, and poor cross-department collaboration.

- Minimal AI Literacy: Staff unfamiliar with how LLMs differ from legacy automation, leading to unrealistic expectations or slow adoption.

2. Crawl–Walk–Run: A Practical Adoption Framework

2.1 Crawl: Introduce a Broad LLM Tool to the Organization

- Secure, Company-Wide Rollout

- Deploy an enterprise-level LLM (e.g., Microsoft Copilot or an on-premises solution).

- Train employees on basic functions such as drafting emails, summarizing documents, and searching internal knowledge.

- Foster Organic AI Learning

- Encourage experimentation and track usage analytics.

- Gather user stories to identify where LLM-based solutions can rapidly increase efficiency.

2.2 Walk: Incrementally Integrate Internal Datasets

- Target Select Knowledgebases

- HR policies, IT support docs, project archives, etc.

- Implement strict data governance, ensuring that only authorized users can access relevant data.

- Assess ROI & Expand

- If usage is high and benefits are clear, scale out to additional departments.

- Establish readiness for more specialized healthcare workflows.
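For readers on the technical side, the governance principle above (only authorized users can access relevant data) can be illustrated with a minimal Python sketch of permission-scoped retrieval. It assumes each knowledge-base document is tagged with the roles allowed to read it; the `Document` schema, role names, and naive keyword matching are hypothetical placeholders, not any specific product's API. In practice, enterprise LLM platforms and search services ship their own access controls, which would typically be used instead of a hand-rolled filter.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Document:
    """A knowledge-base entry tagged with the roles allowed to read it (hypothetical schema)."""
    title: str
    text: str
    allowed_roles: frozenset = field(default_factory=frozenset)


def retrieve_for_user(query: str, user_roles: set, corpus: list) -> list:
    """Return titles of documents that match the query AND that the user may see.

    Authorization is checked before relevance, so restricted text never becomes
    a candidate for an LLM prompt. Matching is a naive case-insensitive keyword
    check, used here only for illustration.
    """
    terms = query.lower().split()
    results = []
    for doc in corpus:
        if not doc.allowed_roles & user_roles:
            continue  # the user holds none of the roles permitted for this document
        haystack = f"{doc.title} {doc.text}".lower()
        if any(term in haystack for term in terms):
            results.append(doc.title)
    return results


# Hypothetical internal documents with role-based access tags.
corpus = [
    Document("PTO policy", "Employees accrue paid time off monthly.", frozenset({"employee", "hr"})),
    Document("Salary bands", "Compensation ranges by job grade.", frozenset({"hr"})),
    Document("VPN setup", "How to configure the VPN client.", frozenset({"employee", "it"})),
]

print(retrieve_for_user("policy time off", {"employee"}, corpus))  # prints ['PTO policy']
```

The key design choice is that the access check runs before any relevance matching, so an employee asking about salaries simply gets no HR-restricted results rather than a filtered answer built from them.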

2.3 Run: Target Healthcare-Specific Domains

- Customer Service & Member Engagement

Chatbots, IVR integrations, and real-time agent assist can improve member and provider satisfaction while reducing call center costs.

- Claims and FWA

Combine LLM-driven OCR or document parsing with anomaly detection to streamline claims and reduce fraud.

- Utilization Management

AI-driven prior authorization checks can shorten wait times and reduce manual errors.
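As a rough illustration of the anomaly-detection half of the claims workflow described above, the Python sketch below flags claims whose billed amount is extreme relative to other claims with the same procedure code. It swaps in a simple, well-known statistical technique (the modified z-score based on the median absolute deviation, or MAD) rather than describing any particular vendor's or Matridyne's method; the claim fields, sample data, and 3.5 cutoff are illustrative assumptions.

```python
from collections import defaultdict
from statistics import median


def flag_outlier_claims(claims, threshold=3.5):
    """Return IDs of claims whose billed amount is extreme for their procedure code.

    Uses the modified z-score, 0.6745 * (x - median) / MAD, computed per procedure code.
    """
    # Group billed amounts by procedure code.
    amounts_by_code = defaultdict(list)
    for claim in claims:
        amounts_by_code[claim["procedure_code"]].append(claim["billed_amount"])

    # Precompute the median and median absolute deviation for each code.
    stats = {}
    for code, amounts in amounts_by_code.items():
        med = median(amounts)
        stats[code] = (med, median(abs(a - med) for a in amounts))

    flagged = []
    for claim in claims:
        med, mad = stats[claim["procedure_code"]]
        if mad == 0:
            continue  # no spread in this group, so the score is undefined; skip it
        score = 0.6745 * (claim["billed_amount"] - med) / mad
        if abs(score) > threshold:
            flagged.append(claim["claim_id"])
    return flagged


# Hypothetical sample claims: one office visit is billed far above its peers.
claims = [
    {"claim_id": "C1", "procedure_code": "99213", "billed_amount": 100},
    {"claim_id": "C2", "procedure_code": "99213", "billed_amount": 105},
    {"claim_id": "C3", "procedure_code": "99213", "billed_amount": 98},
    {"claim_id": "C4", "procedure_code": "99213", "billed_amount": 102},
    {"claim_id": "C5", "procedure_code": "99213", "billed_amount": 101},
    {"claim_id": "C6", "procedure_code": "99213", "billed_amount": 950},
    {"claim_id": "C7", "procedure_code": "80053", "billed_amount": 40},
    {"claim_id": "C8", "procedure_code": "80053", "billed_amount": 40},
    {"claim_id": "C9", "procedure_code": "80053", "billed_amount": 40},
]

print(flag_outlier_claims(claims))  # prints ['C6']
```

A median-based score is used because a single extreme claim barely moves the median, whereas it would inflate a mean and standard deviation and partly mask itself. Groups with no spread (a MAD of zero) are skipped here, so a real system would need a fallback rule for them.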

3. Existing Vendor Platforms vs. Custom AI Solutions

3.1 Why Many Payers May Want to Wait

For payers reliant on established core platforms (e.g., claims administration, care management), waiting for vendors to incorporate AI may be more sustainable than patching in third-party or custom-built solutions. Over time, these vendors will likely offer native LLM modules that fit seamlessly into existing workflows.

3.2 Co-Develop if You’re an Early Mover

If your organization wants first-to-market AI advantages:

- Partner with Providers or Vendors

Co-develop new features to share the financial and technical risk.

- Leverage Beta Programs

You might secure discounted licensing or priority functionality, with an understanding that final refinements will benefit from your real-world feedback.

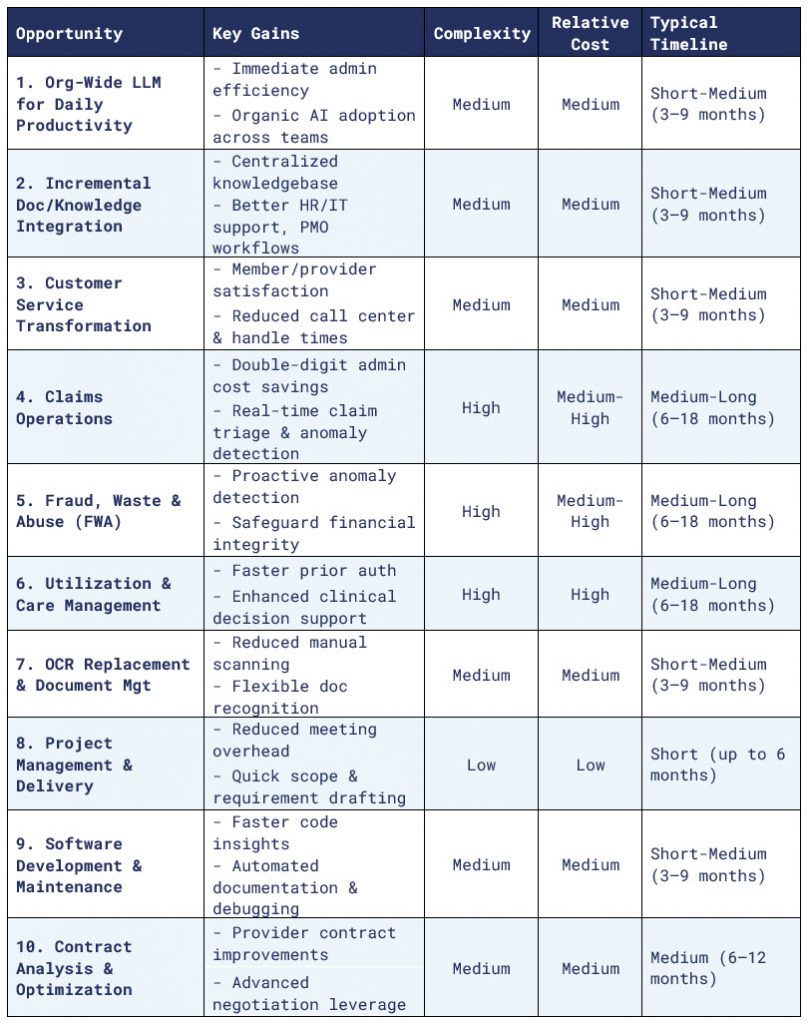

4. Comprehensive LLM Opportunity Table (Q1 2025)

5. Recommended Roadmap and Next Steps

- Conduct a Readiness Assessment

Enlist a neutral third-party consultant with hands-on payer and AI experience to evaluate your data security, organizational structure, and compliance posture.

- Implement an Enterprise LLM “Baseline”

- Deploy a broad, secure LLM to build AI literacy organization-wide.

- Provide training and collect usage metrics to identify where the biggest gains lie.

- Leverage Existing Vendor Roadmaps

- Align your LLM timeline with the AI features your core vendors plan to release.

- If you have the bandwidth and appetite, propose co-development agreements that allow you to shape the solution—and reap early adopter benefits.

- Expand to High-Value Healthcare Functions

- Customer service remains a strong first step for specialized AI in payer operations.

- Over time, target claims, UM, and FWA once trust in AI is established internally.

- Continuously Update Governance

- Create (or refine) an AI/LLM strategy that spells out data handling, compliance, IT integration, and user training.

- Incorporate feedback loops to manage how staff adopt and adapt to new AI capabilities.

6. Conclusion & Future Outlook (Q1 2025)

As we enter 2025, LLM solutions for healthcare payers present an unprecedented chance to reduce costs, enhance member and provider experiences, and future-proof daily workflows. Though McKinsey and other analysts highlight the vast potential, we at Matridyne emphasize that organizational readiness is the critical linchpin. A stepwise “crawl–walk–run” approach offers a sustainable path, letting you gradually scale up from basic productivity gains to more transformative AI programs.

In the near future, more sophisticated “thinking” LLMs and agent-based automation will likely reshape how claims are adjudicated, how network adequacy is maintained, and how member engagement is managed. For now, the most effective strategy is a balanced rollout that addresses immediate opportunities, works in sync with vendor roadmaps, and invests in building a solid foundation for the exciting AI advances on the horizon.